Automated Node Health Monitoring and Remediation

The ICE ClusterWare™ software uses the auto remediation service (ARS) to implement automated remediation solutions to common cluster node problems, such as GPU errors, out-of-memory errors, or mount issues. ARS integrates with Sensu, an open source monitoring solution that processes node health alerts and metrics. ARS identifies the most statistically advisable solution based on the issue or issues reported by Sensu and attempts to resolve the problem without human intervention. Depending on the issue, the remediation can be as simple as running a command or rebooting the node. In many cases the issue is fully resolved after the remediation plan is applied, reducing overhead for the cluster administrator and improving hardware uptime and cluster performance. If the automated solution cannot solve the node's problem, for example a hardware failure that requires replacement, the node is moved into a work queue for the cluster administrator's attention.

ARS is installed by default with the ClusterWare software, but requires some manual configuration to fully enable automated node health tracking and remediation. After initial configuration, all compute nodes with the correct attributes are monitored by ARS. See Configure Automated Remediation Service (ARS) for details.

Note

Enabling automated remediation on administration nodes, such as a cluster login node, is not recommended. Some of the automated remediation solutions can take the node offline and, if set on an administration node, could interrupt cluster availability.

Use ARS for visibility into real-time cluster operational status as well as historical logs of automated actions. See Monitor Node Remediation for details.

ARS integrates with the Slurm workload scheduler. Additional workload scheduler integration is planned for a future release. Contact Penguin Computing to learn more.

Node Health States

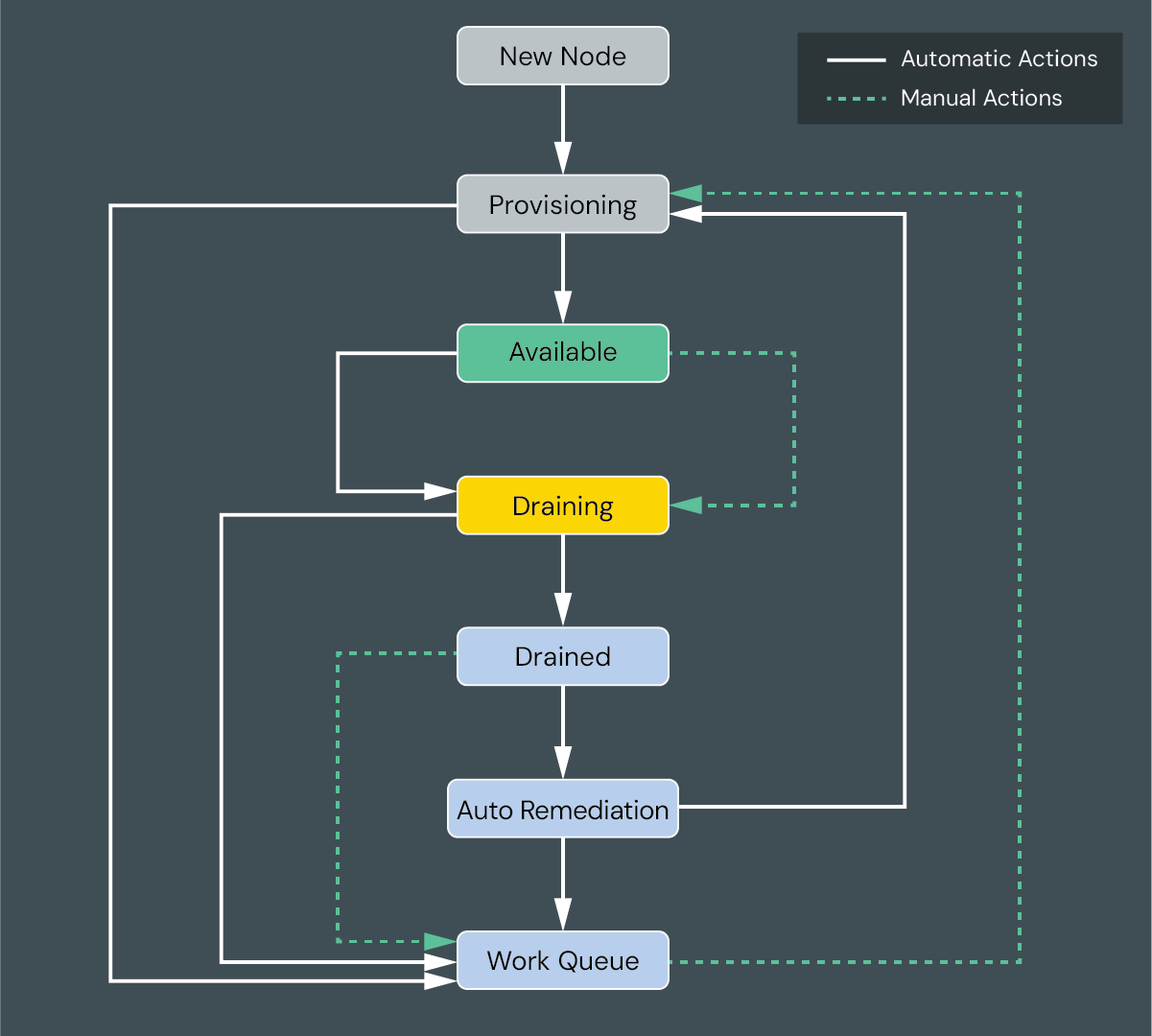

Compute nodes with the _ars_state reserved attribute (required by ARS) have a pre-configured state map. Automated transitions between states are handled via the remediation state machine (RSM). The following diagram illustrates the state map and possible transition points. The solid lines represent automated transitions between states, such as when an error is detected. The dotted lines represent manual state changes, such as requesting a manual drain for a node.

All monitored nodes start in the New Node state before they are powered on.

When a node is powered on, it moves to the Provisioning state where the ClusterWare software runs a series of health checks. Nodes remain in the Provisioning state until all health checks pass.

Once all health checks pass, a node moves to the Available state where it can run jobs. Nodes remain in this state for most of their lifespan.

If Sensu detects an issue on a node, it emits an MQTT message that is picked up by the ClusterWare software. The ClusterWare software moves the node through the Draining and Drained states, allowing currently running jobs to complete before the node is taken out of service.

After a node is fully drained, it moves to the Auto Remediation state. The ClusterWare software reviews the Sensu event data to determine the issue or issues identified, finds the best solution to fix the issue or issues, and runs an automated remediation plan based on the selected solution.

If the issue is resolved by the automated remediation, the node moves back to the Provisioning state to be re-tested and re-deployed to the cluster. If the automated remediation did not fix the issue, the node is moved to the Work Queue state and the cluster administrator should attempt other remediation solutions.
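The state map described above can be sketched as a simple lookup function. This is an illustration only, not the RSM implementation: the state names come from this page, but apart from human_intervention and manual_drain the event labels (power_on, checks_passed, issue_detected, jobs_finished, start_remediation, manual_hold, fixed, not_fixed) are hypothetical names chosen for the example.

```shell
#!/bin/bash
# Illustrative sketch of the node state map; NOT the actual RSM code.
# Only human_intervention and manual_drain are documented transition names.
# All other event labels below are hypothetical.

next_state() {
    local state=$1 event=$2
    case "$state:$event" in
        "New Node:power_on")                  echo "Provisioning" ;;
        "Provisioning:checks_passed")         echo "Available" ;;
        "Available:issue_detected")           echo "Draining" ;;
        "Available:manual_drain")             echo "Draining" ;;
        "Draining:jobs_finished")             echo "Drained" ;;
        # A node drained because of an error goes on to automated remediation.
        "Drained:start_remediation")          echo "Auto Remediation" ;;
        # A manually drained node skips remediation and waits for an admin.
        "Drained:manual_hold")                echo "Work Queue" ;;
        "Auto Remediation:fixed")             echo "Provisioning" ;;
        "Auto Remediation:not_fixed")         echo "Work Queue" ;;
        "Work Queue:human_intervention")      echo "Provisioning" ;;
        *)
            echo "no transition from '$state' on '$event'" >&2
            return 1
            ;;
    esac
}
```

For instance, `next_state "Auto Remediation" not_fixed` prints `Work Queue`, and `next_state "Work Queue" human_intervention` prints `Provisioning`. An unknown combination returns a non-zero status.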

Default Node Health Checks

The following health checks are run on nodes by default when ARS is enabled:

check-system-errors: Looks for problem patterns over a time period.

check-fs-capacity: Reviews disk and inode usage over a time period.

check-slurmd: Checks that slurmd is running on the node.

check-ntp: Verifies that node time has not drifted.

check-zombie: Ensures the number of zombie processes is within an acceptable threshold.

check-ethlink: Verifies that at least one interface is up, there are no bad counters, and there are no flapping links.
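To give a feel for what a threshold-style check involves, the sketch below counts zombie processes and reports a status using the Sensu check convention of exit status 0 for OK, 1 for warning, and 2 for critical. It is not the check-zombie script shipped with ARS; the function names and the thresholds of 5 and 20 are assumptions made for illustration, and the `ps` invocation assumes a Linux procps-style `ps`.

```shell
#!/bin/bash
# Hypothetical zombie-process check; NOT the shipped check-zombie.
# Function names and default thresholds are illustrative assumptions.

count_zombies() {
    # Print the number of processes whose state field starts with Z.
    ps -eo stat= | awk '$1 ~ /^Z/ { n++ } END { print n + 0 }'
}

classify_zombies() {
    # Map a count to a Sensu-style result: 0 = OK, 1 = warning, 2 = critical.
    local count=$1 warn=$2 crit=$3
    if [ "$count" -ge "$crit" ]; then
        echo "CRITICAL: $count zombie processes"
        return 2
    elif [ "$count" -ge "$warn" ]; then
        echo "WARNING: $count zombie processes"
        return 1
    fi
    echo "OK: $count zombie processes"
    return 0
}

main() {
    # Thresholds default to the assumed values 5 (warning) and 20 (critical).
    classify_zombies "$(count_zombies)" "${1:-5}" "${2:-20}"
}

# When used as a standalone check script, finish with:  main "$@"
```

Keeping the counting and the classification in separate functions makes the threshold logic easy to exercise without needing real zombie processes on the node.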

Automated Remediation Example

A compute node is currently in the Available state and is running a job via Slurm. However, the node emits an error indicating that one of the mount points is inactive. The following automated process begins:

Sensu detects the mount point issue and logs a check_mount_availability event.

The ClusterWare software detects the Sensu event and instructs the node to drain as soon as the active job finishes.

The node completes the job and moves through the Draining and Drained states.

After the node is fully drained, it moves to the Auto Remediation state where the ClusterWare software reviews the Sensu event log and evaluates potential solutions. In this case, the selected remediation plan is to restart the node.

The ClusterWare software restarts the node and the node moves to the Provisioning state to be re-tested.

The health checks pass and the node is moved back to the Available state and can pick up a new job.

Only a single error was detected in this simple example. If more than one error occurs, the ClusterWare software considers multiple solutions. The candidate remediation plans are evaluated for severity of the issue, impact on cluster operation, and confidence that they will resolve all of the problems. See Monitor Node Remediation to learn more.

Moving Nodes to and from the Work Queue

If the applied remediation plan does not solve the issue, the problem node is automatically moved to the Work Queue for a cluster administrator to take action. After solving the problem, the node should be moved back to the Provisioning state to be re-tested and re-deployed to the available pool of nodes.

The mqttpub.sh script is used to manually move nodes between states, including from the Work Queue to Provisioning. The script must be run by root.

Three arguments are required:

Node name

Transition name, possible values include:

human_intervention: transition from Work Queue to Provisioning

manual_drain: transition from Available to Work Queue

Log message

To move a remediated node from Work Queue to Provisioning, use the human_intervention transition:

/opt/scyld/clusterware-ars/mqttpub.sh <node name> human_intervention "<message>"

For example, node n1 was fixed after a manual reboot and is ready to be moved to the Provisioning state:

/opt/scyld/clusterware-ars/mqttpub.sh n1 human_intervention "Node rebooted"

In some cases, a node requires manual maintenance even if an error was not detected, for example when a node is scheduled for a hardware upgrade. You can manually move a node from Available to Work Queue using the manual_drain transition:

/opt/scyld/clusterware-ars/mqttpub.sh <node name> manual_drain "<message>"

With the manual_drain transition, the node moves from Available to Draining to Drained, and then directly to Work Queue, bypassing the Auto Remediation state.
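When several nodes need the same transition, a small wrapper can validate the arguments before calling mqttpub.sh for each node. The following is a minimal sketch, not part of the ClusterWare software: the `move_nodes` and `valid_transition` functions and the `DRY_RUN` variable are hypothetical, and only the script path, the argument order, the two transition names, and the root requirement are taken from this page.

```shell
#!/bin/bash
# Hypothetical helper around mqttpub.sh; not shipped with ClusterWare.
# DRY_RUN=1 prints the commands instead of running them.

MQTTPUB=/opt/scyld/clusterware-ars/mqttpub.sh

valid_transition() {
    # Accept only the two transition names documented on this page.
    case "$1" in
        human_intervention|manual_drain) return 0 ;;
        *) return 1 ;;
    esac
}

move_nodes() {
    # Usage: move_nodes <transition> "<message>" <node> [<node> ...]
    if [ "$#" -lt 3 ]; then
        echo "usage: move_nodes <transition> \"<message>\" <node>..." >&2
        return 1
    fi
    local transition=$1 message=$2 node
    shift 2
    if ! valid_transition "$transition"; then
        echo "unknown transition: $transition" >&2
        return 1
    fi
    for node in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "$MQTTPUB $node $transition \"$message\""
        elif [ "$(id -u)" -ne 0 ]; then
            # mqttpub.sh must be run by root.
            echo "mqttpub.sh must be run by root" >&2
            return 1
        else
            "$MQTTPUB" "$node" "$transition" "$message" || return 1
        fi
    done
}
```

For example, `DRY_RUN=1 move_nodes manual_drain "Scheduled upgrade" n1 n2` prints the two mqttpub.sh commands that would be run, which is a convenient way to review a batch before running it for real as root.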